Measuring User Experience by Determining Function Usability and Usefulness

Overview

What E-Commerce Web site with a Customer Management section isn't thinking about which new functionality to add to improve the user experience, or at least to keep up with or get ahead of the competition? This question arose as part of a Sprint Customer E-Management usability study. The goal was to prioritize a list of functionality for improving the site experience and to identify specific tasks to target for redesign.

Research Design

The research project was conducted as an in-lab usability study. Participants worked through a series of tasks on the site. After each task, they rated both the usability and the usefulness of that task. Not every participant attempted every task, but the sample was large enough to gather sufficient data across all tasks. Variables and measures included:

- Exploratory vs. task-directed experiences

- Novice vs. advanced users

- Task success rates, usability issues, usability and usefulness ratings by task

Example tasks included:

- Switch to a different plan

- Learn about your phone

- Add the roadside assistance service to your plan

- Find out how to change your phone number

- Check up on your minutes used

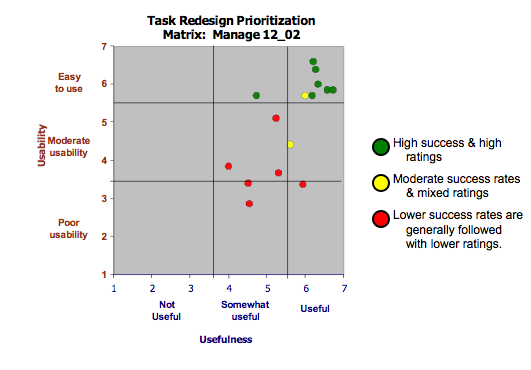

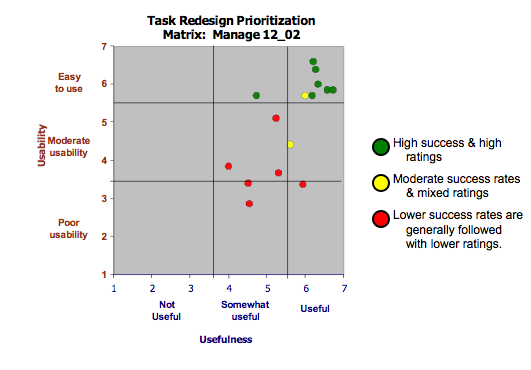

It is useful to note the following about scoring. Refer to the Matrix figure shown below:

- The boundaries of each area may be identified in one of two ways, and it is useful to consider both when using it as a discussion point with decision makers.

  - Lines can be drawn before the study if there is historical data or another business justification for doing so.

  - Lines can be drawn as a function of the results, giving a general categorization that runs from not usable and not useful to very usable and very useful. In this way, low-hanging fruit is identified, and tasks or functionality can be targeted appropriately based on the range of scores. If scores are trending lower overall, for example, decisions may hinge more on which functionality or tasks to drop than on which to fix.

- It's best to use this data alongside other analysis. For example, understanding the extent of the usability issues involved in each task, particularly with regard to a function's visibility, is an important contextual data point.
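The two ways of drawing the matrix boundaries can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the rating values, the 1-7 scale, the fixed cut of 4.0, the use of the median as the results-driven boundary, and the `classify` helper are all hypothetical and are not taken from the study.

```python
from statistics import median

# Hypothetical mean (usability, usefulness) ratings per task on an
# assumed 1-7 scale. These numbers are illustrative, not study data.
ratings = {
    "Switch to a different plan": (3.0, 6.0),
    "Learn about your phone": (5.5, 3.0),
    "Add the roadside assistance service to your plan": (2.5, 2.0),
    "Find out how to change your phone number": (4.0, 5.0),
    "Check up on your minutes used": (6.5, 6.5),
}


def classify(ratings, usability_cut=None, usefulness_cut=None):
    """Place each task in a quadrant of the usability/usefulness matrix.

    A cut passed explicitly models a line drawn before the study.
    A cut left as None is derived from the results (here, the median
    of the observed scores, which is one assumed choice among many).
    """
    if usability_cut is None:
        usability_cut = median(u for u, _ in ratings.values())
    if usefulness_cut is None:
        usefulness_cut = median(f for _, f in ratings.values())

    quadrants = {}
    for task, (usability, usefulness) in ratings.items():
        usable = "usable" if usability >= usability_cut else "not usable"
        useful = "useful" if usefulness >= usefulness_cut else "not useful"
        quadrants[task] = f"{usable} / {useful}"
    return quadrants


if __name__ == "__main__":
    # Lines drawn in advance (assumed midpoint of the assumed scale).
    for task, quadrant in classify(ratings, 4.0, 4.0).items():
        print(f"fixed cut   | {task}: {quadrant}")
    # Lines drawn from the results themselves.
    for task, quadrant in classify(ratings).items():
        print(f"median cut  | {task}: {quadrant}")
```

Comparing the two outputs side by side is one way to show decision makers how much a task's category depends on where the lines are drawn.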

Accomplishments

Multiple data sources were used to identify which specific tasks were elevated into the redesign program. Other tasks were considered for removal. Still others were considered for a complete change in how they are presented to the user, as small changes were not expected to "move the needle" on both usability and perceived usefulness.

Skills

- Usability Testing

- Experimental Design

- Data synthesis: Understanding how usability issues relate to user ratings

- Persuasion: Helping product managers understand the impacts to their products and when it is advisable to change the way things are presented to the user, or even to drop functionality deemed not useful
